For nearly two decades I have worked on integrating data and systems for many different customers, Federal and Commercial alike. In 2009, I founded ArganoMS3 to address enterprise integration and API enablement needs, because interconnectivity was sorely lacking across many customers and systems. At the time we were enamored with Service Oriented Architecture (SOA) and the promises strategists made for it. We quickly realized that philosophy and reality tend to clash on a regular basis: what was supposed to make things easy and manageable instead made systems fragile and prone to failure, because the interconnectivity portion of SOA had not matured enough to ensure service reliability and system uptime.

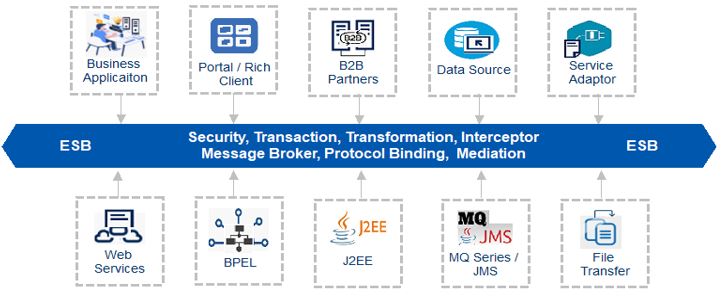

As the tools started to catch up with the philosophy, users began using message brokers like ActiveMQ and WMQ to establish reliability patterns and Staged Event Driven Architecture (SEDA). These systems generally used SOAP messaging, which allowed for contract-first development strategies and was a big step in the right direction toward true interoperability between systems. This also gave rise to the Enterprise Service Bus (ESB) and to products like Oracle Service Bus and MuleSoft, which appeared in order to provide a layer of process ahead of the SOAP services. However, SOAP was soon considered too big and bulky and not friendly enough for developers, so they started to look for the next generation of integration technologies.

Twenty years after the Y2K scare, engineers are scrambling to bring APIs online for customers, and many product companies are scrambling to transform their ESBs into API platforms to meet the demand. So what is an API? In the early 2000s and before, an Application Programming Interface (API) was a way for code in a native language to invoke a library through the methods built into a product, making it perform a function and return an expected response. In 2021, API has become synonymous with Representational State Transfer (REST) based APIs. REST-based APIs bring a lot to the table with regard to connectivity. They have well-defined methods, response codes that address many different scenarios, and support for multiple types of data, and the only requirement is the use of the HTTP/1.1 protocol for communication.
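To make the point about well-defined methods and response codes concrete, here is a minimal sketch in Python. The `handle` function, the item resource, and the in-memory `store` are hypothetical names made up for illustration; a real service would sit behind an HTTP server or framework rather than a plain function.

```python
from http import HTTPStatus


def handle(method, item_id, body, store):
    """Map an HTTP method on a hypothetical item resource to a status code.

    `store` is a plain dict standing in for a real backend.
    Returns a (status, payload) tuple.
    """
    if method == "GET":
        if item_id in store:
            return HTTPStatus.OK, store[item_id]
        return HTTPStatus.NOT_FOUND, None

    if method == "PUT":
        # PUT creates the item if it is missing, otherwise replaces it.
        created = item_id not in store
        store[item_id] = body
        return (HTTPStatus.CREATED if created else HTTPStatus.OK), body

    if method == "DELETE":
        if item_id not in store:
            return HTTPStatus.NOT_FOUND, None
        del store[item_id]
        return HTTPStatus.NO_CONTENT, None

    # Any other verb is not supported by this sketch.
    return HTTPStatus.METHOD_NOT_ALLOWED, None
```

The same small vocabulary of verbs and status codes applies to any resource, which is a large part of why REST is easy for developers to pick up.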

So we have a great approach and a great strategy. What’s the problem? The problem comes with the evolution of enterprises and their data. In 2017, over three years ago, IBM stated that 90% of the world’s data had been created in the previous two years. With more and more connected devices, we are creating more data each and every second. And that is where the problem lies with the current API strategy: HTTP/1.1 was not designed to move huge volumes of data between systems. The amount of hardware needed to support the increasing volumes of data has single-handedly created Cloud providers such as AWS, GCP, and Azure.

So where do we go and how do we get there?

There are so many topics to dive into on how to handle this question, but in this blog we will focus on what I call a service connectivity platform. One of the leading companies in this space is Kong!

As much as it may surprise you, Kong is leading the way in the world of service connectivity platforms. They are the first product company to address multiple layers of connectivity within a single product and, with increasing agility, they are taking on even more needs. However, Kong is frequently misclassified as just an API gateway by many organizations and by evaluators such as Forrester, because those evaluators have not established a service connectivity platform category and, frankly, there just aren’t enough products out there to compete against Kong. So what is a service connectivity platform?

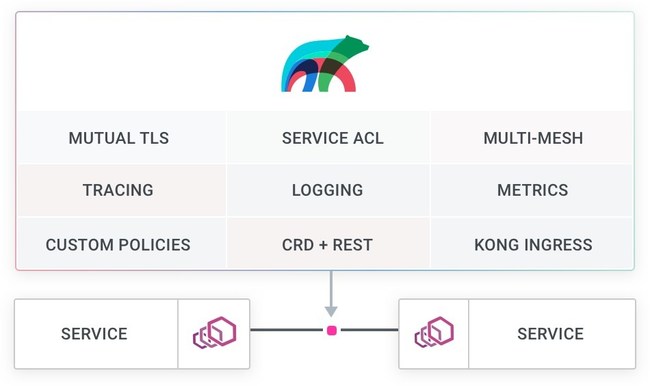

A service connectivity platform is a collection of tools that address multiple needs in a cloud native world, such as ingress and egress control, API management, API gateway, security, and service mesh. The service connectivity platform leverages these components to accelerate communication between services and provides a number of implementation approaches and patterns that historically were not possible. For example, Kong can do a standard legacy API gateway approach similar to other products like Apigee and 3scale. The legacy API gateway approach is like a beefy reverse proxy that applies some rules before sending the request to the service to be fulfilled. There is a head controller, and all traffic has to pass through this location. This can become a choke point in a large enterprise and doesn’t support modern distributed systems. This approach generally requires additional load balancing and networking to manage a modern architecture.

What Kong can do that these other API platforms cannot is implement a point of service enforcement approach to architecture. This is where Kong management and policy enforcement occur inside the pod next to the service, which distributes the workload across a cluster. In addition, the service is portable and doesn’t require any new service or security strategies in the host platform it may be relocated to. Lastly, Kong supports modern communication using protobuf, gRPC, and GraphQL to give flexibility to the API implementers, unlike other platforms such as MuleSoft and TIBCO, which only support HTTP/1.1 protocols.
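The idea of a reverse proxy that "applies some rules before sending the request to the service" can be sketched in a few lines of Python. This is an illustration only and not Kong's API or configuration: the `Gateway` class, its parameters, and the two rules (an API key check and a sliding-window rate limit) are assumptions chosen for the example, and `upstream` is a plain callable standing in for the real service.

```python
import time
from collections import defaultdict, deque


class Gateway:
    """Toy policy enforcement point: authenticate, rate limit, then forward."""

    def __init__(self, upstream, api_keys, limit, window_seconds,
                 clock=time.monotonic):
        self.upstream = upstream          # callable standing in for the service
        self.api_keys = set(api_keys)     # keys allowed through the gateway
        self.limit = limit                # max requests per key per window
        self.window = window_seconds
        self.clock = clock                # injectable so tests can control time
        self.hits = defaultdict(deque)    # per-key timestamps of recent requests

    def handle(self, api_key, request):
        # Rule 1: reject callers without a known API key.
        if api_key not in self.api_keys:
            return 401, "invalid API key"

        # Rule 2: sliding-window rate limit per key.
        now = self.clock()
        recent = self.hits[api_key]
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return 429, "rate limit exceeded"
        recent.append(now)

        # All rules passed: forward the request to the service.
        return 200, self.upstream(request)
```

In the legacy pattern a single `Gateway` like this fronts every service, which is where the choke point comes from. In the point of service enforcement pattern, the same checks run in a small proxy deployed next to each service instance, so the policy work scales out with the cluster.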

Next are the service mesh capabilities that Kong brings to the table. With a native communication channel between the ingress controller, the API manager, and the service mesh, data gets onto the mesh’s data plane faster. In addition, a simple plugin enables tracing from the point of entry across the entire platform, a modern observability feature that other integration platforms don’t have. OpenTracing / OpenTelemetry is an industry standard that is built into the Kong platform, so you can use different visualization tools for a single pane of glass. The service mesh not only gives you better insight, it also provides more service resiliency and a higher level of security with mTLS built right in. The standard certificate rotation capabilities ensure that your platform never has an expired certificate and that communication is never interrupted. Kong Mesh doesn’t just provide value inside the cluster like other service mesh technologies. Kong has taken this a step further to help future-proof enterprises, because they know that not all applications, especially legacy systems in a large enterprise, run under container management. For that reason, Kong Mesh supports VMs as well and provides all of the same capabilities to applications that run in a VM outside of a cluster, bringing the connectivity of the enterprise to a new level.
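For readers who have not worked with mTLS directly, the sketch below shows what "mutual" means at the socket level, using Python's standard `ssl` module. It is not how Kong Mesh is configured (the mesh handles this for you); the function name and the certificate file parameters are hypothetical and only illustrate what a service would otherwise have to set up by hand.

```python
import ssl


def build_mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Build a server-side TLS context that also demands a client certificate.

    The file paths are placeholders supplied by the caller; nothing is loaded
    when they are omitted, so the function can be exercised without real keys.
    """
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # Ordinary TLS only proves the server's identity. Requiring a client
    # certificate as well is what makes the handshake mutual.
    ctx.verify_mode = ssl.CERT_REQUIRED
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2

    if cert_file and key_file:
        # The server's own identity, which must be replaced before it expires.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The certificate authority that client certificates must chain to.
        ctx.load_verify_locations(cafile=ca_file)
    return ctx
```

Doing this by hand for every service, and rotating every certificate before it expires, is the operational burden that built-in mTLS and automatic rotation take off the table.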

So, let’s get back to the big data point. Over the past three years the volume of data has kept growing, and an estimated 1.7MB of data per second was created for every person on Earth in 2020. If we factor in the pandemic, it’s probably nearly 40% higher than that. That is 8 billion people each producing 1.7MB+ of data per second. This information is collected and stored, and in an interconnected enterprise many systems cannot keep up. They have periods of downtime where data gets out of sync, and all the consumers of that information need to be brought back up to date when things come back online. HTTP/1.1 and REST APIs are not able to manage this much data and keep up with its day-to-day message-driven delivery. Because of this, many organizations are actually going backward in technology capabilities and architectural approaches by setting up FTP and file-based data synchronization processes to support the message-driven API data transfers.
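To get a feel for the scale, the figures above can be multiplied out. The short Python sketch below uses only the numbers quoted in this post (8 billion people, 1.7MB per person per second, and a rough 40% pandemic uplift) and assumes decimal units, where 1 PB is one billion MB. The constant and function names are made up for the example.

```python
PEOPLE = 8_000_000_000            # population figure used in the post
MB_PER_PERSON_PER_SECOND = 1.7    # 2020 estimate quoted in the post
PANDEMIC_UPLIFT = 0.40            # the post's rough "nearly 40% higher" guess
MB_PER_PB = 1_000_000_000         # decimal units: 1 PB = 10^9 MB


def global_rate_pb_per_second(mb_per_person, people=PEOPLE):
    """Worldwide data creation rate in petabytes per second."""
    return mb_per_person * people / MB_PER_PB


baseline = global_rate_pb_per_second(MB_PER_PERSON_PER_SECOND)
with_uplift = global_rate_pb_per_second(
    MB_PER_PERSON_PER_SECOND * (1 + PANDEMIC_UPLIFT)
)

print(f"baseline:    {baseline:.1f} PB/s")
print(f"with uplift: {with_uplift:.1f} PB/s")
```

Taken at face value, those estimates work out to roughly 13.6 petabytes per second worldwide, or about 19 petabytes per second with the 40% uplift.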

While the regression in architectural patterns is true for most enterprises and ABSOLUTELY true for Government agencies, some companies on the leading digital edge are taking a different approach. As mentioned above, Kong supports multiple protocols, including Kafka and gRPC, which runs on the HTTP/2 standard. HTTP/2 is the next generation of HTTP and is a very fast streaming protocol. Organizations such as Chick-fil-A, Netflix, Square, and our favorite company chat engine, Slack, are using gRPC to keep up with the huge volumes of data that need to traverse their enterprises. In addition, these companies are leveraging service mesh and cloud native applications running on container platforms, as mentioned above, to round out their service connectivity platforms. These technologies are what keep their tools up and running 24x7x365 with nearly no downtime. They are not built on legacy ESB systems using legacy API gateways and HTTP/1.1. They have advanced because they needed to. The rest of the industry has started to recognize these new technologies and the needs within its own enterprises, and is starting to adopt them as well.

So is this the end of REST APIs? Absolutely not! Just look: we still have some systems out there using COBOL and Fortran. However, the next generation of enterprise systems is here, and it’s built around implementing and using a service connectivity platform. With that, it looks like Kong is ahead of the rest and leading the way.

About the Author: Aaron Weikle

FOUNDER & CEO OF MS3

Aaron has been a thought leader in the API enablement and Enterprise Integration space for nearly two decades. His innovative solutions while working in the Public sector have saved millions of US taxpayer dollars while enabling the government to function at a more efficient pace.

Not only has the Public sector benefited from Aaron’s innovative solutions, but he has also played major roles in major Digital transformation efforts in the Private sector. His participation in solutions such as IoT at Chick-fil-A, API enablement at McDonald’s, and eCommerce implementation at Ralph Lauren has brought his solutions to global markets.